Multi-modal System Design

When a user buys a Bose product, let’s make sure it acts and reacts like one.

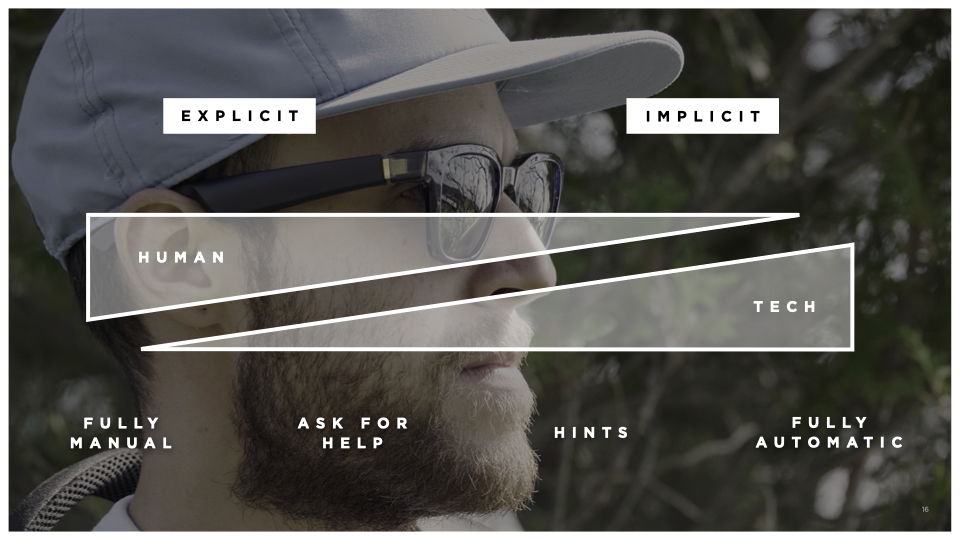

Over time, our user interfaces have evolved from computer-readable to human-readable, making interactions more natural and implicit.

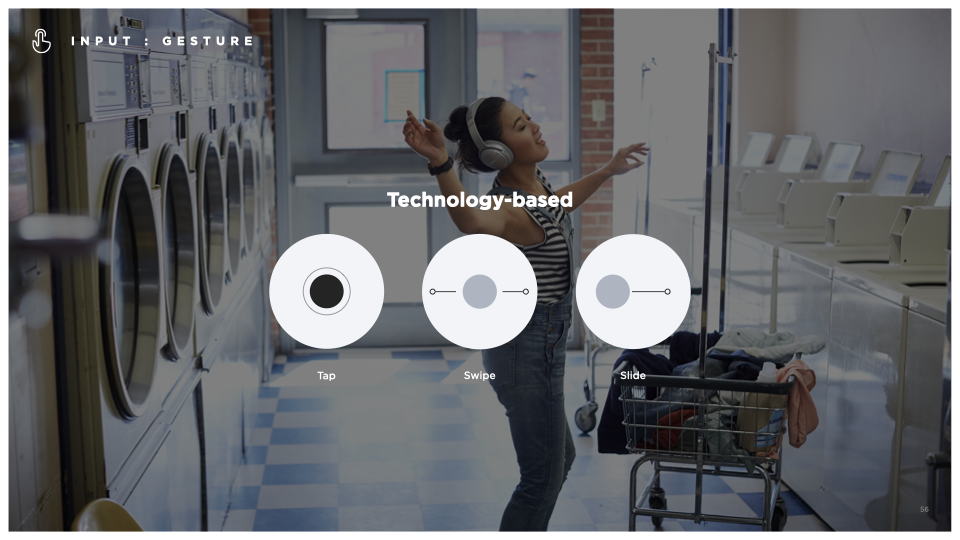

Gestures that are short in duration and focused are the most accessible and the easiest to execute. For instance, single-vector gestures are easier to perform than gestures with multiple twists and turns.

Consider how you can use familiar metaphors to support gestures that feel natural to the user, such as a flick or a swipe away from the body to exit something, or to confirm that they want it out of their focus.

Avoid supporting too many gestures on a single surface, or on multiple surfaces in close proximity. Limiting the gesture set decreases the risk of user confusion and false positives. Prioritize primary controls, and think about which other modalities may be best suited for secondary controls.

Affording a set of common gestures across our ecosystem lets our users reapply their knowledge to new products, which builds their confidence in themselves and in Bose.

When proposing additions to the set of gestures, consider how that gesture could be applied to other products in the ecosystem, rather than to a single product.
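One way to reason about a common gesture set is to treat it as a single vocabulary that every product draws from, and to check each product against it. The following is a minimal sketch under stated assumptions: the gesture names, actions, product names, and helper functions are all hypothetical illustrations, not an existing Bose API.

```python
from dataclasses import dataclass

# Hypothetical shared vocabulary: gesture name -> the meaning it carries
# on every product that supports it. All entries are illustrative.
SHARED_GESTURES = {
    "swipe_forward": "next",
    "swipe_back": "previous",
    "flick_away": "dismiss",
}


@dataclass
class Product:
    name: str
    gestures: dict  # gesture name -> action on this product


def inconsistencies(product):
    """Gestures whose meaning on this product differs from the shared set."""
    return sorted(
        gesture
        for gesture, action in product.gestures.items()
        if gesture in SHARED_GESTURES and SHARED_GESTURES[gesture] != action
    )


def product_specific(product):
    """Gestures that exist only on this product and not in the shared set."""
    return sorted(g for g in product.gestures if g not in SHARED_GESTURES)


# Usage: a hypothetical speaker that reuses "flick_away" for a different action.
speaker = Product("speaker", {"flick_away": "mute", "double_knock": "pair"})
conflicts = inconsistencies(speaker)   # gestures that break the shared meaning
one_offs = product_specific(speaker)   # candidates to generalize or drop
```

A check like this flags two things worth a design conversation: a shared gesture that means something different on one product, and a one-off gesture that might be generalized across the ecosystem or dropped.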

Consider the benefits of skeuomorphic references to previously learned behavior. Although a one-to-one solution may not apply, we can use nuances of a previous interface to help a user make sense of a new interaction method.

For example, users already use sliders to adjust volume and swipe away things they don’t like, so gestures that follow a sliding scale for volume, or a flick to dismiss, build on those familiar metaphors.
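To make the slider and flick metaphors concrete, the sketch below separates a quick flick (dismiss) from a slower slide (volume) on a touch surface. It is only an illustration: the thresholds, units, and function names are assumptions that would need tuning for a real surface and sensor.

```python
import math

# Illustrative thresholds (assumptions, to be tuned per surface and sensor).
FLICK_MAX_DURATION_S = 0.25   # a flick is over quickly
FLICK_MIN_SPEED = 500.0       # distance units per second


def classify_stroke(samples):
    """Classify a touch stroke as 'flick', 'slide', or 'none'.

    samples: list of (t_seconds, x, y) tuples in time order.
    Only the first and last samples are used, so this treats the
    stroke as a single vector from start to end.
    """
    if len(samples) < 2:
        return "none"
    t0, x0, y0 = samples[0]
    t1, x1, y1 = samples[-1]
    duration = t1 - t0
    distance = math.hypot(x1 - x0, y1 - y0)
    if duration <= 0 or distance == 0:
        return "none"
    speed = distance / duration
    if duration <= FLICK_MAX_DURATION_S and speed >= FLICK_MIN_SPEED:
        return "flick"
    return "slide"


def slide_to_volume(start_volume, dx, track_length):
    """Map travel along the surface to a volume in [0.0, 1.0], like a slider."""
    return min(1.0, max(0.0, start_volume + dx / track_length))
```

A "flick" result could be routed to a dismiss action, while a "slide" feeds `slide_to_volume` continuously so the volume tracks the finger the way a physical slider would.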

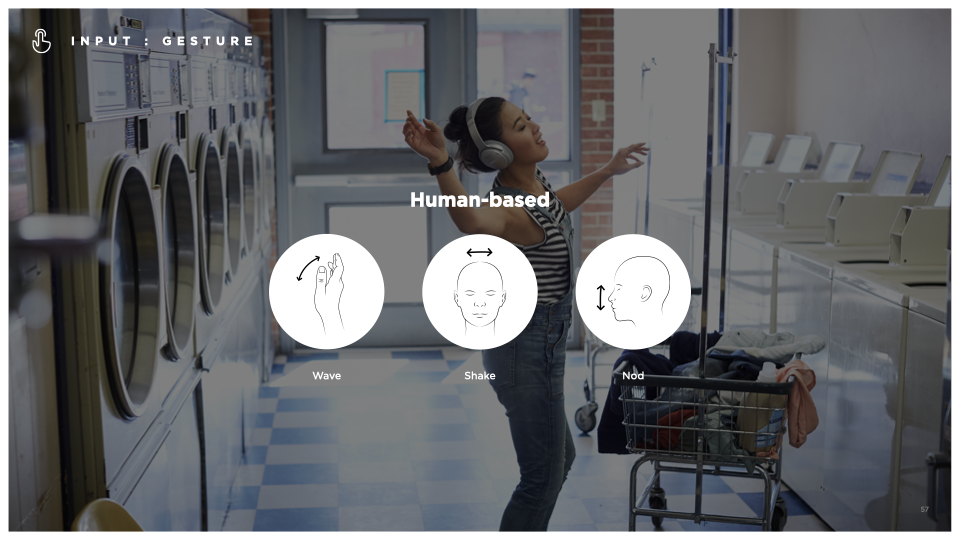

Human-based gestures take advantage of actions a user typically performs in conversation. For example, a head nod is a common action that has obvious connotations around acceptance. Gaze detection can also be employed to determine focus and attention.